Deep learning has driven much of the recent progress in artificial intelligence (AI), achieving strong results in tasks such as image recognition, natural language processing, and machine translation. This guide gives an overview of deep learning, from its fundamental concepts to its advanced applications, with brief explanations and visualizations of key algorithms.

1. Artificial Neural Networks: Building Blocks of Deep Learning

Artificial neural networks (ANNs) are the foundation of deep learning. They are loosely inspired by the structure of the brain: layers of interconnected “neurons” each perform a simple calculation on their inputs and pass the result on as output.

- Neurons: Basic processing units that compute a weighted sum of their inputs plus a bias, pass the result through an activation function, and emit a single output. (Image showcasing different activation functions and their outputs)

- Layers: Groups of neurons arranged in sequence, with the outputs of one layer feeding the inputs of the next. The number of layers determines the “depth” of the network.

- Weights and Biases: Learnable parameters of the network. Weights set the strength of the connections between neurons, biases shift each neuron's output, and both are adjusted during training.

- Activation Functions: Introduce non-linearity to the network, allowing it to learn complex patterns. Without them, any stack of layers would collapse into a single linear transformation. Common activation functions include sigmoid, ReLU, and Leaky ReLU.
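
The pieces above can be put together in a few lines of NumPy. This is a minimal sketch, not a reference implementation: the function name `dense_layer`, the input values, and the weights are all made up for illustration.

```python
import numpy as np

def sigmoid(z):
    # Squashes any real number into the range (0, 1).
    return 1.0 / (1.0 + np.exp(-z))

def relu(z):
    # Passes positive values through and clips negatives to zero.
    return np.maximum(0.0, z)

def leaky_relu(z, slope=0.01):
    # Like ReLU, but negative inputs keep a small slope instead of zero.
    return np.where(z > 0, z, slope * z)

def dense_layer(x, W, b, activation):
    # Each row of W holds one neuron's weights; b holds one bias per neuron.
    return activation(W @ x + b)

# Hypothetical layer: 3 inputs feeding 2 neurons.
x = np.array([1.0, -2.0, 0.5])
W = np.array([[0.2, -0.1, 0.4],
              [-0.5, 0.3, 0.1]])
b = np.array([0.1, -0.2])

hidden_relu = dense_layer(x, W, b, relu)
hidden_leaky = dense_layer(x, W, b, leaky_relu)
hidden_sigmoid = dense_layer(x, W, b, sigmoid)
```

Swapping the `activation` argument changes only the non-linearity; the weighted sum `W @ x + b` stays the same.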

2. Learning Algorithms: Guiding the Network

Training adjusts the network's weights and biases so that its predictions match the examples in a dataset, usually a large one. How far the predictions are from the targets is measured by a loss function, and the learning algorithms below search for parameters that make this loss small.

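- Gradient Descent: An iterative algorithm that moves the weights and biases a small step in the direction that decreases the loss fastest, which is the direction opposite to the gradient. The step size is set by the learning rate. Imagine a ball rolling downhill until it settles at a low point; that point is a minimum of the loss, though not necessarily the lowest one overall.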

- Backpropagation: Computes the gradient of the loss function with respect to every weight and bias by applying the chain rule backward, from the output layer to the input layer, enabling efficient updates. If gradient descent is the ball rolling downhill, backpropagation is what measures the slope under the ball at each step, telling us how much each parameter contributed to the error.
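
To make the two ideas concrete, here is a small sketch of backpropagation and gradient descent written by hand in NumPy. Everything specific in it is an assumption chosen for illustration: the XOR toy data, the network size (one hidden layer of four tanh units with a linear output), the mean squared error loss, the learning rate, and the number of steps.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy dataset (assumed for illustration): the XOR function.
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
y = np.array([[0.0], [1.0], [1.0], [0.0]])

# Parameters of a 2 -> 4 -> 1 network.
params = {
    "W1": rng.normal(0.0, 0.5, size=(2, 4)),
    "b1": np.zeros((1, 4)),
    "W2": rng.normal(0.0, 0.5, size=(4, 1)),
    "b2": np.zeros((1, 1)),
}

def forward(params, X):
    h = np.tanh(X @ params["W1"] + params["b1"])   # hidden activations
    y_hat = h @ params["W2"] + params["b2"]        # linear output
    return h, y_hat

def loss_fn(params, X, y):
    _, y_hat = forward(params, X)
    return np.mean((y_hat - y) ** 2)               # mean squared error

def backprop(params, X, y):
    # Chain rule applied from the output back toward the input.
    h, y_hat = forward(params, X)
    n = X.shape[0]
    d_yhat = 2.0 * (y_hat - y) / n                 # dLoss/dy_hat
    d_h = d_yhat @ params["W2"].T                  # back through output layer
    d_z1 = d_h * (1.0 - h ** 2)                    # back through tanh
    return {
        "W2": h.T @ d_yhat,
        "b2": d_yhat.sum(axis=0, keepdims=True),
        "W1": X.T @ d_z1,
        "b1": d_z1.sum(axis=0, keepdims=True),
    }

learning_rate = 0.1
initial_loss = loss_fn(params, X, y)
for step in range(2000):
    grads = backprop(params, X, y)
    for name in params:
        # Gradient descent: step against the gradient.
        params[name] -= learning_rate * grads[name]
final_loss = loss_fn(params, X, y)
```

Comparing `initial_loss` with `final_loss` shows whether training made progress. In practice, frameworks compute these gradients automatically, so the manual `backprop` function here is only meant to show what happens under the hood.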

3. Optimization Techniques: Fine-Tuning the Learning Process

Several techniques improve the training process, helping the network learn efficiently and making it less likely to stall in poor local minima or on flat regions of the loss surface.

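- Momentum: Helps accelerate learning by accumulating a decaying sum of past gradients, so that consistent gradient directions build up speed while oscillating ones cancel out. The accumulated term provides a “push” in the right direction.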

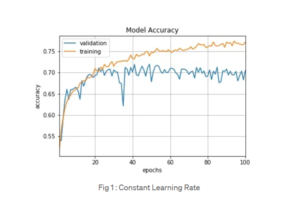

- Adaptive Learning Rates: Methods such as AdaGrad, RMSProp, and Adam adjust the step size for each parameter based on the recent magnitude of its gradients, preventing large swings in the parameter updates.

- Regularization: Techniques that reduce overfitting so the model generalizes better to unseen data. Weight decay penalizes large weights, while dropout randomly disables a fraction of neurons during training so the network cannot rely on any single one.
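
The update rules behind these techniques are short enough to sketch directly. The code below is an illustration under stated assumptions rather than a definitive implementation: the function names, default hyperparameters, and the toy quadratic loss are all hypothetical, and RMSProp stands in as one example of an adaptive learning rate method.

```python
import numpy as np

def momentum_step(w, grad, velocity, lr=0.1, beta=0.9):
    # Velocity is a decaying sum of past gradients.
    velocity = beta * velocity + grad
    return w - lr * velocity, velocity

def rmsprop_step(w, grad, sq_avg, lr=0.01, decay=0.9, eps=1e-8):
    # Divide each step by a running estimate of that parameter's gradient size.
    sq_avg = decay * sq_avg + (1.0 - decay) * grad ** 2
    return w - lr * grad / (np.sqrt(sq_avg) + eps), sq_avg

def add_weight_decay(grad, w, weight_decay=1e-4):
    # L2 penalty: pulls every weight toward zero in proportion to its size.
    return grad + weight_decay * w

def dropout(activations, drop_prob, rng, training=True):
    # Inverted dropout: zero a random subset and rescale the survivors,
    # so the expected activation is unchanged. Disabled at inference time.
    if not training or drop_prob == 0.0:
        return activations
    keep = rng.random(activations.shape) >= drop_prob
    return activations * keep / (1.0 - drop_prob)

# Momentum on a toy quadratic loss 0.5 * sum(curvature * w**2).
curvature = np.array([1.0, 10.0])
w = np.array([1.0, 1.0])
velocity = np.zeros_like(w)
for _ in range(300):
    grad = curvature * w
    w, velocity = momentum_step(w, grad, velocity)

# RMSProp: gradients of very different scale produce similar step sizes.
grad = np.array([100.0, 0.01])
w_new, sq_avg = rmsprop_step(np.zeros(2), grad, np.zeros(2))
step_sizes = np.abs(w_new)

# Dropout on a vector of ones with a 50% drop probability.
rng = np.random.default_rng(0)
dropped = dropout(np.ones(1000), drop_prob=0.5, rng=rng)
```

In the RMSProp example both coordinates move by roughly the same amount even though their gradients differ by four orders of magnitude, which is the behavior the "adaptive" label refers to.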

4. Advanced Deep Learning Concepts: Deepening Your Knowledge

Beyond basic feed-forward networks, you'll encounter architectures and techniques designed for specific kinds of data and tasks.

1. Convolutional Neural Networks (CNNs): Specialized for grid-like data such as images, they slide small learned filters across the input to extract spatial features and identify patterns.

2. Recurrent Neural Networks (RNNs): Designed for sequential data like text and speech, they carry a hidden state from one step to the next, which acts as an internal memory that captures context and dependencies. (Image of an RNN architecture with unfolding layers)

3. Long Short-Term Memory (LSTM): A type of RNN whose gated memory cells control what is stored, updated, and forgotten. This lets it learn longer-term dependencies than a plain RNN, enabling effective processing of long text or speech segments. (Image of an LSTM architecture with internal memory cells)

4. Attention Mechanisms: Techniques that let a model assign higher weights to the parts of the input most relevant to the task at hand, improving performance in tasks like machine translation and text summarization.

5. Generative Adversarial Networks (GANs): Two neural networks competing against each other, where one generates data (generator) and the other tries to distinguish it from real data (discriminator). This adversarial process leads to the generation of realistic data, like images, music, and text. (Image of a GAN architecture with generator and discriminator networks)

6. Explainable AI (XAI): Techniques that help us understand why deep learning models make certain predictions, increasing transparency and trust in these models. This is crucial for ensuring fairness, accountability, and responsible AI development.
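
As one concrete example from the list above, the following sketch shows scaled dot-product attention, a widely used form of attention. The source text does not specify a particular variant, so this choice, along with the function names and the random toy inputs, is an assumption made for illustration.

```python
import numpy as np

def softmax(scores, axis=-1):
    # Subtract the row maximum first for numerical stability.
    shifted = scores - scores.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(queries, keys, values):
    # Score each query against every key, scaled by sqrt of the key dimension.
    d_k = keys.shape[-1]
    scores = queries @ keys.T / np.sqrt(d_k)
    # Each row of weights sums to 1: how much a query attends to each position.
    weights = softmax(scores, axis=-1)
    # Output is a weighted average of the values.
    return weights @ values, weights

# Hypothetical toy input: 5 positions, key size 8, value size 3, 2 queries.
rng = np.random.default_rng(0)
keys = rng.normal(size=(5, 8))
values = rng.normal(size=(5, 3))
queries = rng.normal(size=(2, 8))

context, weights = scaled_dot_product_attention(queries, keys, values)
```

Each row of `weights` shows how strongly one query attends to each of the five input positions, and `context` holds the corresponding blended values.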

5. Deep Learning Applications: Impacting Our World

Deep learning is now applied across many domains:

- Computer Vision: Image recognition, object detection, image segmentation, video analysis.

- Natural Language Processing: Machine translation, text summarization, sentiment analysis, chatbot development.

- Speech Recognition and Synthesis: Speech-to-text conversion, text-to-speech generation.

- Healthcare: Medical image analysis, drug discovery, personalized medicine.

- Finance: Fraud detection, risk assessment, algorithmic trading.

- Robotics: Robot control, autonomous navigation, object manipulation.